Introduction

At MacPaw, we place a lot of emphasis on user interfaces and innovation. We believe that the way the product communicates and presents itself to the user is one of the fundamental components of its success and applicability in the real world. The faster and easier the service solves the user's problem, and the clearer the service looks, the more likely the service will become a habit.

Interfaces

Human–computer interaction (HCI) is the study of the design and use of computer technology, with a focus on the interfaces between people (users) and computers. HCI researchers observe how humans interact with computers and design technologies that allow humans to interact with computers in novel ways.

Humans interact with computers in many ways, and the interface between the two is crucial to facilitating this interaction. HCI is also sometimes termed human-machine interaction (HMI), man-machine interaction (MMI), or computer-human interaction (CHI). Desktop applications, internet browsers, handheld computers, and computer kiosks use today's prevalent graphical user interfaces (GUIs). Voice user interfaces (VUI) are used for speech recognition and synthesizing systems, and the emerging multi-modal user interfaces allow humans to engage with embodied character agents in a way that cannot be achieved with other interface paradigms. The growth in the human-computer interaction field has led to an increase in the quality of interaction and resulted in many new areas of research beyond it. Instead of designing regular interfaces, the different research directions explore the concepts of multimodality over unimodality, intelligent adaptive interfaces over command- and action-based ones, and active interfaces over passive ones.

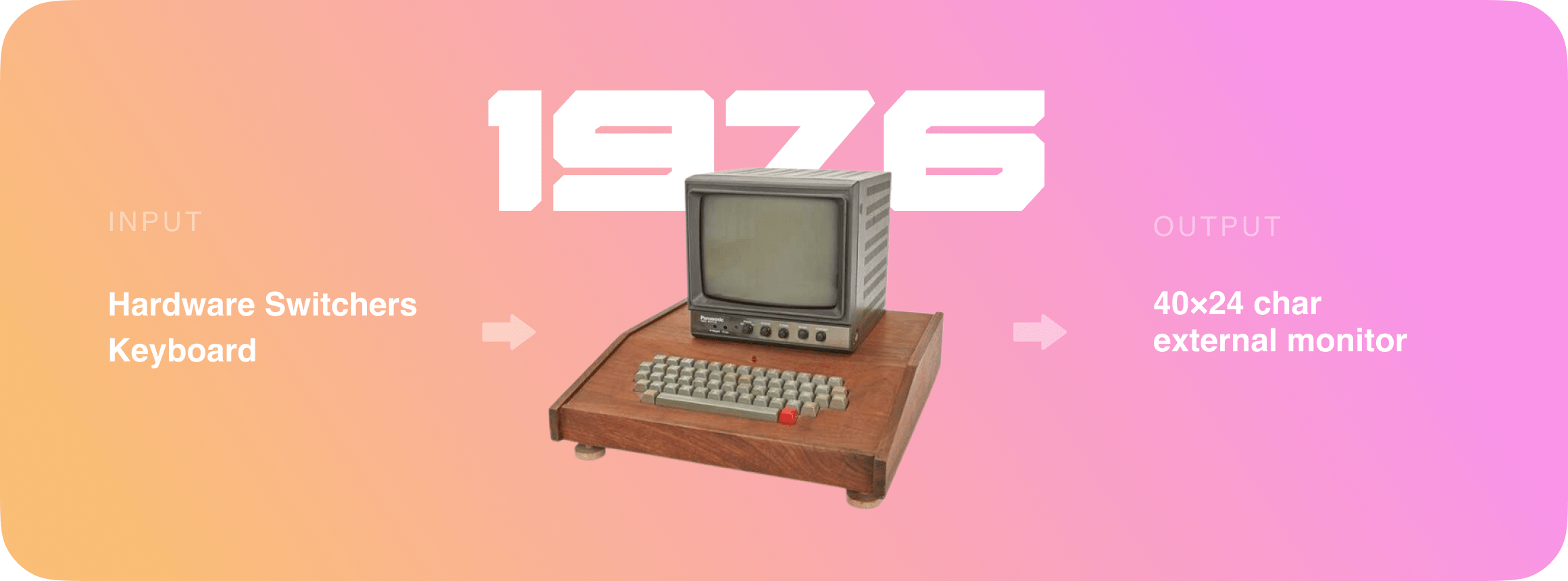

The interaction between users and computers has also changed with the development of technologies and interfaces. Initially, keyboards were used for input, and tapes and punch cards for output. With technological progress, a large variety of input and output devices appeared.

Let's look at a brief comparison of how HCI evolved through time.

Augmented reality, virtual reality

AR/VR devices have become another step in the evolution of I/O devices and user interaction with a computer.

Augmented reality (AR) is an interactive experience of a real-world environment where the objects in the real world are enhanced by computer-generated perceptual information, sometimes across multiple sensory modalities, including visual, auditory, haptic, somatosensory, and olfactory.

Virtual reality (VR) is a simulated experience similar to or completely different from the real world. Applications of virtual reality include entertainment (video games), education (medical or military training), and business (virtual meetings). Other types of VR-style technology include augmented reality and mixed reality, sometimes referred to as extended reality (XR).

The roots of augmented reality (AR) and virtual reality (VR) technology go back to 1838, when Charles Wheatstone invented the stereoscope, a device that shows a separate image to each eye to create a 3D image for the viewer. Since then, the technology has kept evolving but has remained niche. However, as graphics and computing technologies have advanced in the past few years, AR and VR have experienced a renaissance.

Consumer headsets like the Oculus Rift and the HTC Vive are helping gamers and designers reimagine their interfaces. In the same way, businesses are using these technologies to train employees and market new products. Currently, they are widely used in medicine, science, entertainment, games, etc.

Apple glasses

Based on patent filings, Apple has been exploring virtual and augmented reality technologies for more than 10 years. With both exploding in popularity after the launch of ARKit, Apple's dabbling is growing more serious and could lead to a dedicated AR/VR product in the not too distant future.

Apple is rumored to have a secret research unit with hundreds of employees working on AR and VR and exploring ways to use the emerging technologies in future Apple products. VR/AR hiring has ramped up over the last several years, and Apple has acquired multiple AR/VR companies as it furthers its work in the AR/VR space.

In January 2021, former Apple hardware engineering chief Dan Riccio transitioned to a new role overseeing Apple's work on an AR/VR headset. The project has faced development challenges, and Apple executives believe that Riccio's focus may help. Although Riccio oversees the overall project, Mike Rockwell continues to lead the day-to-day efforts. Apple is also rumored to be working on at least two AR projects: an augmented reality headset expected around 2022, followed later by a sleeker pair of augmented reality glasses. Many rumors have focused solely on the glasses, leading to some confusion about Apple's plans, but it appears the headset will be the first product launched.

realityOS

2022 brings more evidence that Apple is working on some AR/VR technologies.

Independent researchers have spotted several leaks.

Apple accidentally force-pushed TARGET_FEATURE_REALITYOS macros to the oss-distribution repository and later removed them.

The same thing happened before with bridgeOS.

Later in the year, the com.apple.platform.realityos key was spotted in the App Store application logs.

Also, the realityOS trademark was registered by Realityo Systems LLC, which points to "Corporation Trust Center", a real company that provides trademark services and counts Apple among its clients.

WWDC22

Although realityOS was not presented at WWDC22, some parts of the user interface in the updated operating systems have changed: macOS Ventura and iPadOS 16 got Stage Manager.

Stage Manager is an entirely new multitasking experience that automatically organizes apps and windows, making it quick and easy to switch between tasks. For the first time on iPad, users can create overlapping windows of different sizes in a single view, drag and drop windows from the side, or open apps from the Dock to create groups of apps for faster, more flexible multitasking.

This feature brought back 3D transitions between window groups, which may hint at the future of realityOS and AR/VR interfaces.

Use case: filesystem visualization

Given the growing interest in AR/VR technologies and the potential release of an Apple headset, we want to be ready for that event. Our aim is to validate to what extent the current set of tools makes it possible to build AR interaction interfaces, and how comfortable those interfaces are for the user. Since we have extensive experience working with the file system, we chose the visualization of computer disk space in AR as a test task.

Our goal is to create a PoC application that will make it possible to visualize a MacBook file system using AR. This task consists of 4 key parts.

There is no Apple AR headset yet

At this point, Apple still hasn't unveiled a prototype of Apple Glasses, and there's no guarantee it will ever happen. Existing AR headsets do not have native integration with the macOS SDK, and the implementation will be different for each platform, which is inconvenient. Nevertheless, Apple puts a lot of effort into developing AR/VR technologies on its iOS devices. It provides a set of APIs for implementing AR, in particular, these:

Configuring horizontal plane detection with ARKit:

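    import ARKit

    func setUpSceneView() {
        let configuration = ARWorldTrackingConfiguration()
        // Detect horizontal surfaces (floors, tables) to place nodes on.
        configuration.planeDetection = .horizontal
        // Person segmentation with depth is not supported on every device, so check first.
        if ARWorldTrackingConfiguration.supportsFrameSemantics(.personSegmentationWithDepth) {
            configuration.frameSemantics.insert(.personSegmentationWithDepth)
        }
        // Set both delegates before the session starts delivering updates.
        sceneView.delegate = self
        sceneView.session.delegate = self
        sceneView.session.run(configuration)
    }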

Placing a folder node on a plane:

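    import SceneKit
    import UIKit

    // FileNode is assumed to be an SCNNode subclass with an init(file:) initializer.
    class FolderNode: FileNode {
        override init(file: File) {
            // A 10 cm box with slightly rounded edges.
            let geometry = SCNBox(width: 0.1, height: 0.1, length: 0.1, chamferRadius: 0.005)
            let material = SCNMaterial()
            material.isDoubleSided = false
            // With locksAmbientWithDiffuse enabled, SceneKit uses the diffuse
            // property for ambient lighting too, so the color is set on diffuse.
            material.locksAmbientWithDiffuse = true
            material.diffuse.contents = UIColor.red
            geometry.materials = [material]
            super.init(file: file)
            // Assign the geometry so the node is actually rendered.
            self.geometry = geometry
        }
        required init?(coder: NSCoder) {
            fatalError("init(coder:) has not been implemented")
        }
    }

    func addFileNode(with file: File, to planeNode: SCNNode, at position: SCNVector3? = nil) {
        let node = nodesBuilder.makeFileNode(with: file)
        // convenientCenterPointToPlaceAnObject(for:) is a project helper, not a SceneKit API.
        node.position = position ?? sceneView.convenientCenterPointToPlaceAnObject(for: planeNode)
        sceneView.scene.rootNode.addChildNode(node)
    }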

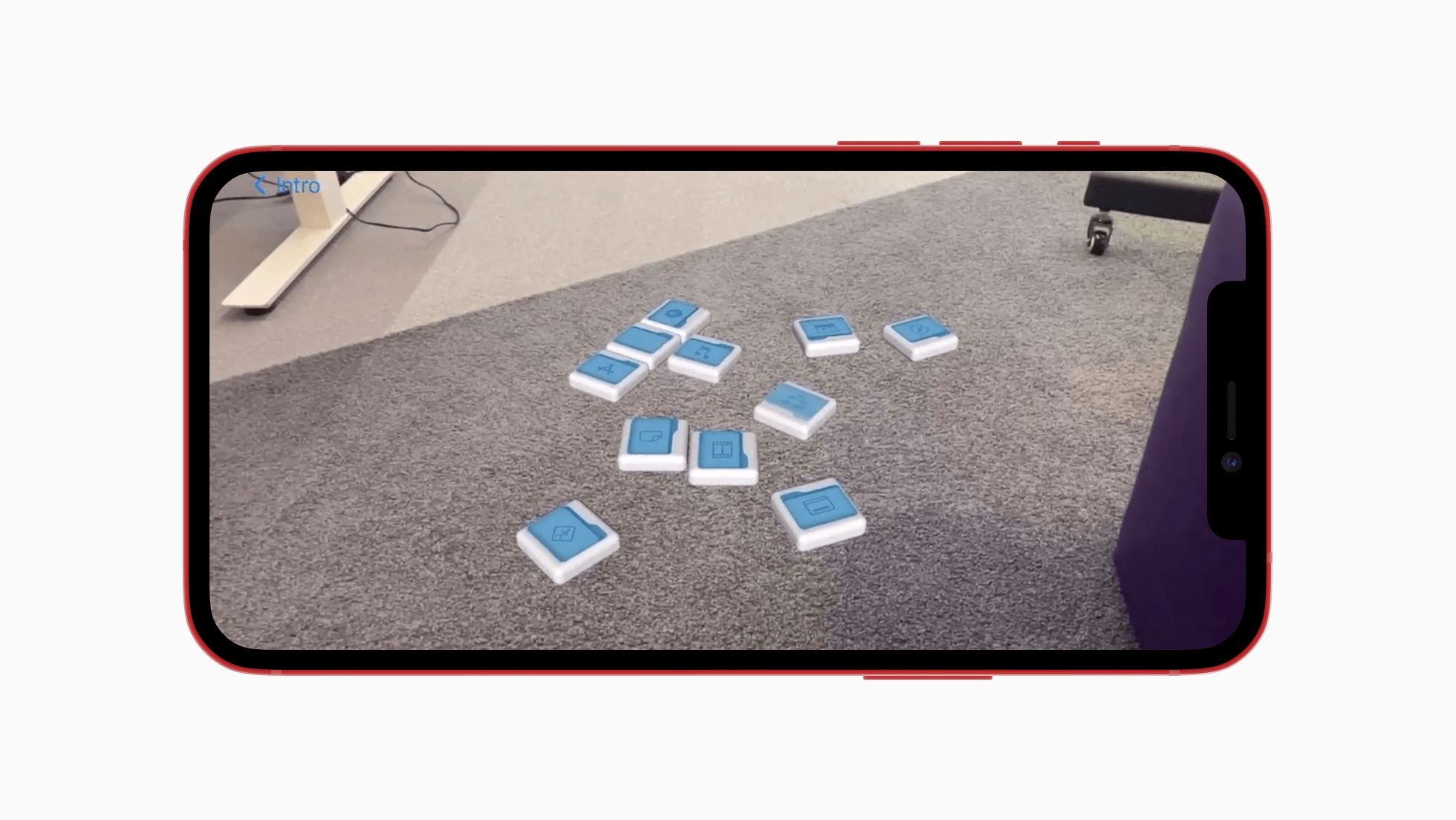

As a result, there is a possibility to place SceneKit objects on a plane:

Based on this, it is likely that these technologies will be used in a future prototype. In effect, Apple gives us a hint: with the technology already on the market, we can simulate Apple's AR headset on any iOS device that supports the APIs shown above.
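The folder node above uses a fixed 10 cm box. For a disk space visualization, the box size would normally reflect how much space an item takes. The following is a minimal sketch of one possible mapping, not the project's actual code: the function name `edgeLength(forBytes:largestBytes:)` and the `minEdge`/`maxEdge` limits are assumptions made for illustration.

```swift
import Foundation

/// Maps a file size in bytes to the edge length (in meters) of the cube that represents it.
/// The cube's volume grows linearly with the size, so the edge grows with the cube root.
/// `minEdge` and `maxEdge` are illustrative limits, not values from the original project.
func edgeLength(forBytes bytes: UInt64,
                largestBytes: UInt64,
                minEdge: Double = 0.02,
                maxEdge: Double = 0.10) -> Double {
    guard largestBytes > 0 else { return minEdge }
    // Share of the largest sibling, clamped to 0...1.
    let fraction = min(Double(bytes) / Double(largestBytes), 1.0)
    // The cube root keeps the volume, not the edge, proportional to the size.
    let scaled = maxEdge * cbrt(fraction)
    // Never shrink below a size the user can still point at.
    return max(minEdge, scaled)
}
```

The result could then be passed to `SCNBox(width:height:length:chamferRadius:)` after converting it to `CGFloat`. Scaling the volume rather than the edge keeps very large folders from dwarfing everything else on the plane.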

Handling user input in AR

User input in AR/VR is entirely different from what we have on touch- and keyboard-based devices. To navigate the disk structure, we recognize hand gestures using hand pose detection from the Vision framework:

- Finger-point gesture: access a file or a folder

- Swipe down gesture: dismiss the details

    import Vision

    final class HandTracking {
        private let videoProcessingQueue = DispatchQueue(label: "HandsTrackingQueue", qos: .userInitiated)
        private let handPoseRequest: VNDetectHumanHandPoseRequest = {
            let request = VNDetectHumanHandPoseRequest()
            // Track a single hand; the default is two.
            request.maximumHandCount = 1
            return request
        }()
        /// Detects the index fingertip in `pixelBuffer` and reports its location on the main queue.
        /// The point is in Vision's normalized image coordinates (origin at the bottom-left).
        /// `orientation` tells Vision how the buffer is rotated; pass the value that matches the UI.
        func processPixelBuffer(pixelBuffer: CVPixelBuffer,
                                orientation: CGImagePropertyOrientation = .up,
                                completion: @escaping (CGPoint) -> Void) {
            videoProcessingQueue.async {
                let handler = VNImageRequestHandler(cvPixelBuffer: pixelBuffer, orientation: orientation, options: [:])
                do {
                    // Perform VNDetectHumanHandPoseRequest.
                    try handler.perform([self.handPoseRequest])
                    // Continue only when a hand was detected in the frame.
                    // maximumHandCount is 1, so there is at most one observation.
                    guard let observation = self.handPoseRequest.results?.first else {
                        return
                    }
                    // Get points for thumb and index finger.
                    let thumbPoints = try observation.recognizedPoints(.thumb)
                    let indexFingerPoints = try observation.recognizedPoints(.indexFinger)
                    // Look for tip points.
                    guard let thumbTipPoint = thumbPoints[.thumbTip], let indexTipPoint = indexFingerPoints[.indexTip] else {
                        return
                    }
                    // Ignore low confidence points.
                    guard thumbTipPoint.confidence > 0.3 && indexTipPoint.confidence > 0.3 else {
                        return
                    }
                    DispatchQueue.main.async {
                        completion(indexTipPoint.location)
                    }
                } catch {
                    print(error)
                }
            }
        }
    }
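The fingertip location that Vision returns is normalized to the range 0...1 with the origin in the bottom-left corner, while UIKit views use points with the origin in the top-left corner. A minimal conversion sketch follows. The helper name `viewPoint(fromNormalized:in:)` is hypothetical, and the sketch assumes the camera image fills the view exactly, ignoring any aspect-fill cropping.

```swift
import Foundation

/// Converts a Vision-style normalized point (origin bottom-left, range 0...1)
/// into view coordinates (origin top-left, measured in points).
/// Assumes the image fills the view exactly, without cropping or letterboxing.
func viewPoint(fromNormalized point: CGPoint, in viewSize: CGSize) -> CGPoint {
    CGPoint(x: point.x * viewSize.width,
            y: (1 - point.y) * viewSize.height)   // flip the vertical axis
}
```

The converted point is the kind of value a hit test against the scene view would expect when deciding which file or folder node the finger points at.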

Our implementation in action:

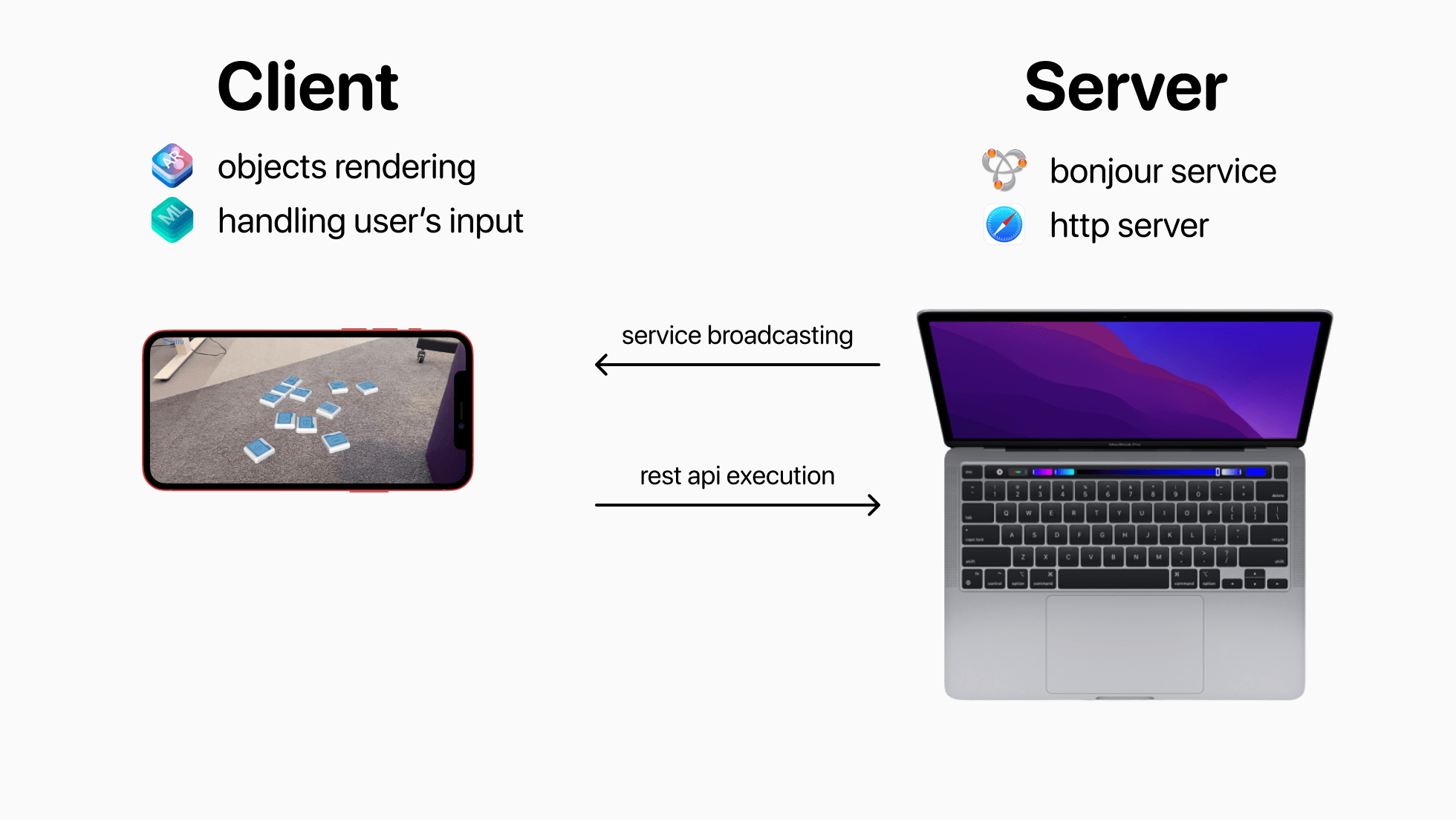
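Hand pose detection yields one fingertip position per frame, so a gesture such as swipe down has to be derived from a sequence of positions. The sketch below shows one simple way to do that. `FingertipSample`, `SwipeDownDetector` and all thresholds are assumptions made for illustration and would need tuning on a real device.

```swift
import Foundation

/// A fingertip sample in normalized image space (origin bottom-left, y grows upward).
struct FingertipSample {
    let x: Double
    let y: Double
    let time: TimeInterval
}

/// Collects recent fingertip samples and reports when they form a downward swipe.
/// All thresholds are illustrative assumptions.
struct SwipeDownDetector {
    var window: TimeInterval = 0.5        // how far back to look, in seconds
    var minDrop: Double = 0.25            // required downward travel (share of frame height)
    var maxSidewaysDrift: Double = 0.15   // allowed horizontal travel

    private(set) var samples: [FingertipSample] = []

    /// Adds a sample and returns true if the samples inside the window form a swipe down.
    mutating func add(_ sample: FingertipSample) -> Bool {
        samples.append(sample)
        // Forget samples that are older than the time window.
        let cutoff = sample.time - window
        samples.removeAll { $0.time < cutoff }
        guard samples.count >= 2, let first = samples.first else { return false }

        let drop = first.y - sample.y            // positive when the finger moved down
        let drift = abs(sample.x - first.x)
        guard drop >= minDrop, drift <= maxSidewaysDrift else { return false }

        samples.removeAll()                      // reset so one swipe is reported once
        return true
    }
}
```

Each point delivered by the hand tracking completion handler would be fed into `add(_:)` together with the frame timestamp. Comparing only the oldest and newest samples keeps the logic short, at the cost of ignoring the shape of the path in between.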

Connecting a Mac to a device that doesn't exist

The next step is to link the AR device to a Mac.

Since we know nothing about the future protocol, we use the technology available today. For device discovery, Apple offers a technology called Bonjour, which works on the "broadcast" principle. In our case, the Mac acts as a broadcaster and publishes a service with a deterministic name to the local network. Knowing the name of the service, our AR headset can look it up and, if the service is found, learn the name of the server in the local network. That is enough to establish a connection and communicate through a standardized API. The result is a classic client-server architecture in which the computer acts as the server and the AR headset as the client.

A quote from the official Bonjour documentation:

Bonjour, also known as zero-configuration networking, enables automatic discovery of devices and services on a local network using industry-standard IP protocols. Bonjour makes it easy to discover, publish, and resolve network services with a sophisticated, easy-to-use programming interface that is accessible from Cocoa, Ruby, Python, and other languages.

Publish a service (server-side):

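    // Publishing requires the initializer that takes a port.
    // `port` (Int32) is the TCP port the HTTP server listens on.
    let netService = NetService(domain: domain, type: type, name: name, port: port)
    netService.delegate = self
    netService.publish()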

Look up the service (client side):

    import Foundation

    final class DiskoverseFinder: NSObject {
        var onFindDiskoverse: ((Diskoverse) -> Void)?
        var onDiskoverseDisappeared: ((String) -> Void)?
        private let browser = NetServiceBrowser()
        func findDiskoverse() {
            browser.delegate = self
            // An empty domain string searches the default domains.
            browser.searchForServices(ofType: "_mo_broadcasting_protocol._tcp.", inDomain: "")
        }
    }

    extension DiskoverseFinder: NetServiceBrowserDelegate {
        // Called for every matching service that appears on the network.
        func netServiceBrowser(_ browser: NetServiceBrowser, didFind service: NetService, moreComing: Bool) {
            onFindDiskoverse?(Diskoverse(name: service.name))
        }
        // Called when a previously found service goes away.
        func netServiceBrowser(_ browser: NetServiceBrowser, didRemove service: NetService, moreComing: Bool) {
            onDiskoverseDisappeared?(service.name)
        }
    }

Streaming data and service API from a Mac to an AR device and vice versa

Once the discovery problem is solved, we need a standardized API that allows the AR device and the computer to transfer data to each other. An important concept here is that the whole service should be "app-context" and not "machine-context." In other words, the AR device has access only to what the server exposes, not to the computer as a whole.
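One way to keep the service "app-context" is to make the server track the folder the client is viewing and refuse to leave a chosen root. The sketch below illustrates the idea. `HierarchyPointer` and its methods are hypothetical names that mirror the `/change` and `/backward` routes of the API, and the check is purely lexical, so a real server would also need to resolve symbolic links.

```swift
import Foundation

/// Tracks the folder the client is currently viewing and keeps it inside `root`.
/// The type and its method names are hypothetical, chosen for illustration.
struct HierarchyPointer {
    let root: [String]                  // path components of the shared root folder
    private(set) var current: [String]  // path components of the current folder

    init(rootPath: String) {
        root = HierarchyPointer.normalize(rootPath)
        current = root
    }

    var currentPath: String { "/" + current.joined(separator: "/") }

    /// Moves to `path` (absolute, or relative to the current folder) if it stays under `root`.
    @discardableResult
    mutating func change(to path: String) -> Bool {
        let candidate = path.hasPrefix("/")
            ? HierarchyPointer.normalize(path)
            : HierarchyPointer.normalize(currentPath + "/" + path)
        guard candidate.starts(with: root) else { return false }
        current = candidate
        return true
    }

    /// Moves one level up, but never above `root`.
    @discardableResult
    mutating func backward() -> Bool {
        guard current.count > root.count else { return false }
        current.removeLast()
        return true
    }

    /// Splits a path into components, resolving "." and ".." lexically.
    private static func normalize(_ path: String) -> [String] {
        var parts: [String] = []
        for part in path.split(separator: "/") {
            switch part {
            case ".": continue
            case "..": if !parts.isEmpty { parts.removeLast() }
            default: parts.append(String(part))
            }
        }
        return parts
    }
}
```

With a root such as the illustrative `/Users/demo/Shared`, a request to change to `../../..` or to `/etc` is rejected, so the AR client can never browse outside the folder the server decided to share.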

For the server part, which exposes its interface as an API, we use an HTTP server running on the local network. Once the service is started, the client communicates with it through a REST API.

    // Lists files
    server["/list"] = {...}
    // Returns file path for details
    server["/file/:path"] = {...}
    // Go backwards in file hierarchy
    server["/backward"] = {...}
    // Returns file's thumbnail path
    server["/thumbnail/:path"] = {...}
    // Change hierarchy pointer
    server["/change/:path"] = {...}

The macOS server part can be launched with no UI, like a background service.
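On the client side, the routes listed above translate into plain HTTP requests. The sketch below shows how the iOS app might build the request URLs and decode a file list. It is an illustration under assumptions: `DiskoverseAPI`, `RemoteFile` and the JSON shape of the `/list` response are hypothetical, and whether the server's router accepts a percent-encoded slash inside `:path` depends on the HTTP library in use.

```swift
import Foundation

/// One entry of the assumed `/list` response. The JSON shape is hypothetical.
struct RemoteFile: Codable, Equatable {
    let name: String
    let path: String
    let isDirectory: Bool
    let size: Int64
}

/// Builds request URLs for the server routes. Host and port come from service discovery.
struct DiskoverseAPI {
    let host: String
    let port: Int

    /// URL for a route without parameters, for example "list" or "backward".
    func url(for route: String) -> URL? {
        var components = URLComponents()
        components.scheme = "http"
        components.host = host
        components.port = port
        components.path = "/" + route
        return components.url
    }

    /// URL for a route with a `:path` parameter, for example "file", "thumbnail" or "change".
    func url(for route: String, path: String) -> URL? {
        // Encode "/" as well, so the whole file path travels as a single URL segment.
        var allowed = CharacterSet.urlPathAllowed
        allowed.remove(charactersIn: "/")
        guard let encoded = path.addingPercentEncoding(withAllowedCharacters: allowed) else {
            return nil
        }
        var components = URLComponents()
        components.scheme = "http"
        components.host = host
        components.port = port
        components.percentEncodedPath = "/" + route + "/" + encoded
        return components.url
    }
}

/// Decodes the assumed JSON array returned by `/list`.
func decodeFileList(_ data: Data) throws -> [RemoteFile] {
    try JSONDecoder().decode([RemoteFile].self, from: data)
}
```

The resulting URLs can be passed to `URLSession` as usual. Keeping URL building separate from networking makes the request format easy to check without a running server.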

Components diagram

- macOS (server) starts an HTTP service for REST API availability

- macOS (server) starts Bonjour service broadcasting for discoverability

- iOS (client) locates the service and calls the REST API to get the file system information

- iOS (client) handles the user's input and notifies the server via the REST API that the current folder has changed and new content is required

Conclusion

The study has shown that with a set of existing technologies available on Apple platforms, it is possible to simulate AR wearable headsets to implement prototypes. iOS devices with the support of ARKit and Vision could be used as simulators.

This is an independent publication, and it has not been authorized, sponsored, or otherwise approved by Apple Inc.